How to Maximize Your Company’s Generative AI Potential Using Static, Dynamic, and Recursive Prompts

Bigger than mobile. More important than the Internet. Industrial Revolution–level.

That’s how I feel about generative AI. It may seem like a bold statement for a product released only a few short months ago. The sentiment stems less from the ChatGPT product itself and more from the technology behind it: generative AI using large language models (LLMs).

Sure, it was immediately clear that ChatGPT would take chatbots to the next level, and the recently released GPT-4 can even ace the bar exam (though it is still prone to false responses). But it won’t take long for this technology to far exceed today’s achievements and transform the way we work and live.

It will redefine how we interact with our mobile and web apps. It will change how developers code and how customer support operates. It will redraw the global map of leaders and followers. It will create new winners and new losers. It will render many businesses obsolete (just look at how a single Redditor used it to build a Chrome extension that can replace Grammarly, a $12B company), and it will open the door to a sea of new solutions and companies. It will mark this decade as one of the greatest milestones in human history.

In other words, generative AI will go far beyond changing the game; it will create an entirely new playground.

What We’re Doing at Navan

At Navan, we use generative AI, along with other ML/AI techniques, across all of our internal functions as well as in our product. Our virtual travel agent, for example, uses generative AI to deliver the best experience for our users.

The Navan team has also been busy building a conversational business intelligence (CBI) tool that lets CFOs and travel admins have ChatGPT-style conversations about our data and ask questions like, “How many bookings did we have yesterday?”, “What was our strongest day yet?”, and “Show me the top 20 companies in terms of NPS for January 2023.”

The result is nothing short of amazing. In fact, it works so well that we decided to roll out CBI to all of our customers so they can get answers from their own data. And we’re just getting started. As they say, “watch this space.”

But we’re not stopping there. We’re also using generative AI to create a CBI system that goes even deeper, giving companies the tools to unlock data and insights — insights that are otherwise virtually impossible to unearth.

The secret has been to evolve this process from a limited set of schemas, to an unlimited number of schemas, to [drumroll] having the AI emulate a brainstorming session with itself. By doing this, we can overcome some of the fundamental limitations of working with LLMs, effectively raise the “IQ” of existing LLMs, improve their reasoning, and end up with amazing results. It’s something that has the potential to transform our business and, in fact, our entire industry.

Even better: With the right tools and some technical know-how, it’s something that every company can create for itself.

People who know me know that I only understand simple things. So here’s a simple approach for building your own conversational BI that has the ability to emulate brainstorming using recursive prompts. Buckle up!

How Does a CBI Work?

Many people assume that the first step in building a CBI system is to connect their database to OpenAI, but that’s not necessarily the case. In fact, the process can be much simpler.

Let me explain. Imagine a user asks a question about data, such as, “What was my company’s total travel spend yesterday?” The question goes to the CBI service (which runs on the company’s server), which builds a prompt to send to OpenAI. To do that, it prepends the following to the user’s question:

- Current date: Some LLMs don’t have the current date as part of their base prompt. Without it, the LLM may interpret “yesterday” as the day before its training cutoff date.

- Table structure: Table bookings (booking_id, base_price, tax, total_price, vendor...)

- DBA guidelines: “Act as a Snowflake data analyst. To calculate ‘total travel,’ always take base_price (do not use total_price). POLICY_VIOLATION_REASON is an array.”

- Examples of questions/queries:

  - Input: “Show me the bookings per month for the past 12 months”

  - Output: “Select count(booking_id) from bookings where…;”

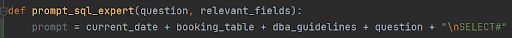

Here’s how the prompt looks in the code:

You’ll notice the “\nSELECT” that I added at the end. Some AI models find it easier to simply continue the text they’re given, which is why I added the “SELECT.” For the most part, though, the prompt should also work without it.

Below you can see how an example is provided to the AI model in the prompt. This is called “few-shot learning”: a machine learning technique that enables a model to learn a task from just a few examples. It’s extremely powerful, and AI models respond to it very well. Generally, the more relevant examples you provide (within the prompt’s token limit, discussed below), the more accurate the results you’ll get.
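To make the prompt structure described above concrete, here is a minimal Python sketch of how the pieces could be assembled. It is not Navan’s code: the function name `build_sql_prompt`, the exact layout, the sample date, and the sample few-shot pair are assumptions made for illustration, and the example query uses only columns listed in the table structure above.

```python
from datetime import date

# Illustrative inputs modeled on the bullets above (assumptions, not Navan's actual prompt).
TABLE_STRUCTURE = "Table bookings (booking_id, base_price, tax, total_price, vendor)"
GUIDELINES = (
    "Act as a Snowflake data analyst. To calculate 'total travel', always take "
    "base_price (do not use total_price). POLICY_VIOLATION_REASON is an array."
)
# Hypothetical few-shot example: a question paired with the SQL that answers it.
EXAMPLES = [
    ("What is the average base price per vendor?",
     "SELECT vendor, AVG(base_price) FROM bookings GROUP BY vendor;"),
]


def build_sql_prompt(question: str, today: date, table_structure: str,
                     guidelines: str, examples: list[tuple[str, str]]) -> str:
    """Prepend the current date, schema, guidelines, and few-shot examples to a question."""
    parts = [f"Current date: {today.isoformat()}", table_structure, guidelines]
    # Few-shot section: each example shows the model an input and the expected output.
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    # End with "SELECT" so a completion-style model only has to finish the query.
    parts.append(f"Input: {question}\nOutput:\nSELECT")
    return "\n\n".join(parts)


prompt = build_sql_prompt(
    "What was my company's total travel spend yesterday?",
    date(2023, 3, 20), TABLE_STRUCTURE, GUIDELINES, EXAMPLES,
)
print(prompt)
```

Because the model’s reply continues after the trailing “SELECT”, that keyword has to be put back in front of the completion before the query is run.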

From here, ChatGPT returns a SQL query that can answer the user's question. The CBI system then executes the query directly on Snowflake and returns the resulting data set to the user.

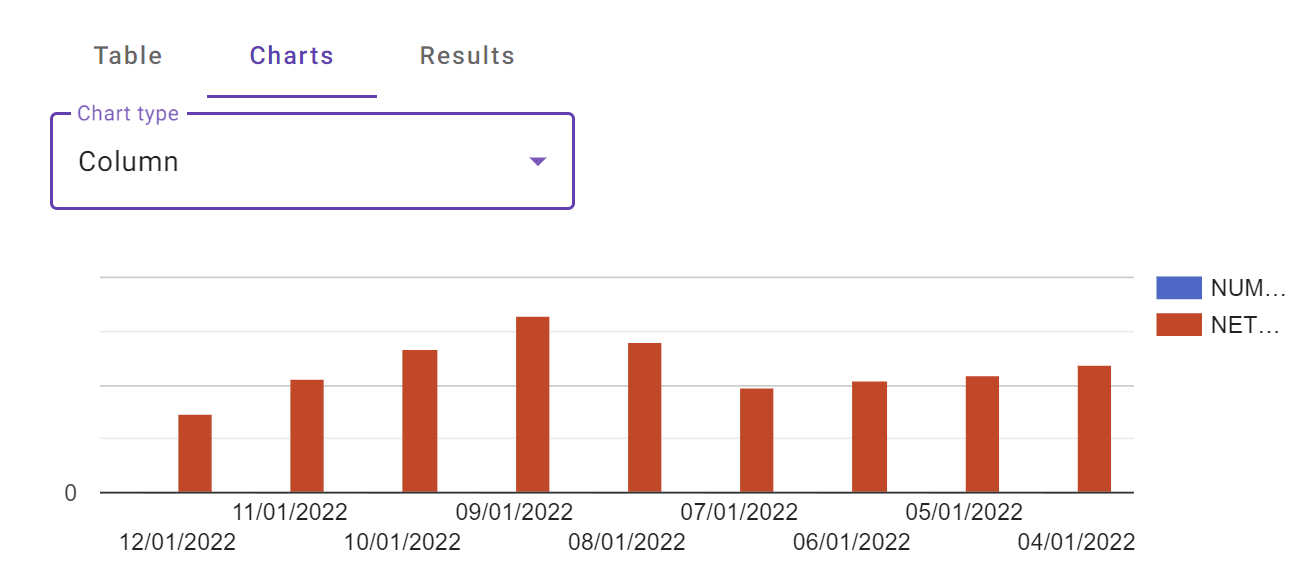
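The execute step can be sketched in a few lines. This example swaps Snowflake for Python’s built-in `sqlite3` so that it runs anywhere, and the model’s reply is a hard-coded string standing in for a real API call; the helper names `complete_sql` and `run_query` and the two sample rows are hypothetical.

```python
import sqlite3


def complete_sql(completion: str) -> str:
    """Re-attach the "SELECT" that ended the prompt to the model's completion."""
    return "SELECT " + completion.strip()


def run_query(conn: sqlite3.Connection, sql: str) -> list[tuple]:
    """Execute the generated query and return every row of the result set."""
    return conn.execute(sql).fetchall()


# In-memory stand-in for the Snowflake bookings table, with two toy rows.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE bookings (booking_id INTEGER, base_price REAL, "
    "tax REAL, total_price REAL, vendor TEXT)"
)
conn.executemany(
    "INSERT INTO bookings VALUES (?, ?, ?, ?, ?)",
    [(1, 200.0, 20.0, 220.0, "United"), (2, 150.0, 15.0, 165.0, "Delta")],
)

# Hypothetical model reply to a prompt that ended with "SELECT".
model_completion = " SUM(base_price) AS total_travel_spend FROM bookings;"
sql = complete_sql(model_completion)
rows = run_query(conn, sql)
print(sql)
print(rows)  # [(350.0,)]
```

Note that the query sums `base_price` rather than `total_price`, which is exactly what the DBA guideline in the prompt asks for.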

If you want to visualize the data, just prompt the AI to act as your front-end expert and chart it. This is what it looks like in the code:

This is the chart the AI returns:

Here’s a basic flow chart of the process (thanks, ChatGPT, for generating this!):

Start with Static Prompts

Your prompts at this point are pretty static. You have the schema you’re targeting, as well as the relevant guidelines and examples. Finally, you add the question from the user.

If you want to enable conversations about data from multiple schemas/tables, you have to keep adding each table’s structure, along with the relevant examples, to the prompt. At some point, however, you’ll get an error, because every model limits the number of tokens a prompt can contain.

What’s a token? It’s the basic unit of text that an AI language model reads and produces. In natural language processing (NLP), which handles tasks like language generation, machine translation, and sentiment analysis, tokens are the individual words, word fragments, punctuation marks, and other elements that make up a piece of text. The model breaks text down into tokens (a process called “tokenization”) before working with it. For OpenAI’s GPT models, a token is often only part of a word; in English, one token averages roughly four characters.

Each AI model has its own token limit, and that limit covers both the prompt and the model’s response. At the time of writing, GPT-3.5 supports up to 4k tokens, while GPT-4 supports either 8k or 32k tokens. Other models have other limits.
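A quick way to see when a static prompt stops fitting is to estimate its size before sending it. The sketch below is an approximation under stated assumptions: `approx_token_count` counts words and punctuation marks instead of running a real tokenizer (such as OpenAI’s `tiktoken`), and the model keys and the 500-token reserve for the answer are illustrative choices.

```python
import re

# Context windows (prompt + completion) quoted above; keys are illustrative labels.
CONTEXT_LIMITS = {"gpt-3.5": 4_096, "gpt-4-8k": 8_192, "gpt-4-32k": 32_768}


def approx_token_count(text: str) -> int:
    """Rough estimate: one token per word and per punctuation mark.

    Real GPT tokenizers often split a word into several tokens, so the true
    count can differ; use a proper tokenizer when accuracy matters.
    """
    return len(re.findall(r"\w+|[^\w\s]", text))


def fits_in_context(prompt: str, model: str, reserved_for_answer: int = 500) -> bool:
    """Check that the prompt leaves room for the answer inside the model's window."""
    return approx_token_count(prompt) + reserved_for_answer <= CONTEXT_LIMITS[model]


# Simulate a static prompt that has grown to 300 table definitions.
schema_line = "Table bookings (booking_id, base_price, tax, total_price, vendor)\n"
static_prompt = schema_line * 300
print(approx_token_count(static_prompt))           # 3900
print(fits_in_context(static_prompt, "gpt-3.5"))   # False
print(fits_in_context(static_prompt, "gpt-4-8k"))  # True
```

Reserving room for the answer matters because a prompt that uses the entire window leaves the model no tokens to reply with.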

Fortunately, there’s a way around these limitations.

Dynamic Prompts: Achieving Scalability

The road to richer data discoveries involves querying multiple schemas, whose combined table structures and examples quickly become too long for a static prompt. You can address this issue with dynamic prompts, where the prompt is assembled based on the user’s input.

This process relies on embeddings: compact vector representations that capture the semantic or contextual meaning of input data, such as words, phrases, or items, so that models can process and compare them efficiently. We use them to find the examples (INPUT, OUTPUT) that are most similar in meaning to the user’s question. This way, only the relevant parts are included in the prompt, so its size no longer grows with the number of schemas, and the approach can scale to as many schemas as needed.
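Here is a minimal sketch of that retrieval step, under loud assumptions: a toy bag-of-words vector stands in for a real embedding model, cosine similarity (a common choice for comparing embeddings) is the similarity measure, and the example library, including the hypothetical `expense_reports` table, is invented for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_examples(question: str, examples: list[tuple[str, str]], k: int = 2):
    """Return the k (INPUT, OUTPUT) pairs whose INPUT is closest in meaning to the question."""
    query = embed(question)
    ranked = sorted(examples, key=lambda ex: cosine_similarity(query, embed(ex[0])),
                    reverse=True)
    return ranked[:k]


# Hypothetical example library spanning more than one schema.
EXAMPLES = [
    ("How many bookings did we have per vendor?",
     "SELECT vendor, COUNT(booking_id) FROM bookings GROUP BY vendor;"),
    ("What is the average base price of our bookings?",
     "SELECT AVG(base_price) FROM bookings;"),
    ("Which employees submitted the most expense reports?",
     "SELECT employee_id, COUNT(*) FROM expense_reports GROUP BY employee_id "
     "ORDER BY COUNT(*) DESC;"),
]

# Only the selected examples would be placed in the prompt.
chosen = select_examples("What is the average base price per vendor?", EXAMPLES)
for example_input, _ in chosen:
    print(example_input)
```

Word overlap is only a crude proxy: with a real embedding model, a question phrased with different words (say, “supplier” instead of “vendor”) could still match the right examples, which is what makes embeddings worth using here.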

We used this model for calculating the embeddings and the similarities:

But there’s an even more advanced level of prompting, and this is where the magic really happens.

Recursive Prompts: The Secret to Getting Much Deeper and Smarter Responses From Your AI

“Recursive prompts” (a term I coined) are an advanced technique that can be used with the existing CBI to discover unexpected insights in the data. The method repeatedly uses the output of one prompt as the input for the next. Think of it as a brainstorming session between the AI and itself: a dynamic, interactive conversation that allows a more detailed exploration of complex data and generates valuable, highly tailored insights on a given topic.

Let’s say, for example, that the user is a CFO who’s looking for ways to reduce their company’s travel spend by 10%.

We initiate the process with a simple prompt:

“You are the CFO of Navan. You need to assess Navan's travel spend for 2022. You have a data analyst that can help you with getting the data that you need. Ask your data analyst questions about your travel spend in 2022.”

ChatGPT spits out ~10 obvious questions about the travel spend — things like:

- What is the total travel spend for Navan in 2022?

- How many bookings were made by Navan in 2022?

- What is the average travel spend per booking for Navan in 2022?

All of the questions are sent to the CBI, which returns the answers. Those answers are then fed back to the AI acting as CFO, this time with a slightly different prompt:

“You are the CFO of Navan. You have data points below (questions and answers). Your main tool to control the travel spend is the travel policy that you define. Which additional question would you ask the data analyst in order to be able to suggest ways to reduce the travel spend without frustrating your employees? Remember, you are the CFO. The analyst can't suggest ways to reduce cost.”

| TOTAL_TRAVEL_SPEND |
| --- |
| 98XXX93.5 |

| COMPANY_NAME | NO_OF_BOOKINGS | NET_BOOKING_VALUE |
| --- | --- | --- |
| Navan | 1xxx56 | 9xxx3.5 |

| DEPARTMENT | AVG_TRAVEL_SPEND_PER_BOOKING |
| --- | --- |
| Expense | 8xx.51928922 |
| Business Operations | 6xxx.022125 |

etc…
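One way to assemble that second prompt is to render each CBI result as a header line followed by comma-separated rows, like the data points above, and append them to the CFO instructions. This is a sketch under assumptions: the helper names are hypothetical, labeling each result with the question that produced it is a choice made for this sketch (the prompt mentions “questions and answers”), and the pairing of questions to the sample results is illustrative.

```python
FOLLOWUP_INSTRUCTIONS = (
    "You are the CFO of Navan. You have data points below (questions and answers). "
    "Your main tool to control the travel spend is the travel policy that you define. "
    "Which additional question would you ask the data analyst in order to be able to "
    "suggest ways to reduce the travel spend without frustrating your employees? "
    "Remember, you are the CFO. The analyst can't suggest ways to reduce cost."
)


def format_result(columns: list[str], rows: list[list]) -> str:
    """Render one query result as a header line plus one comma-separated line per row."""
    lines = [", ".join(columns)]
    lines += [", ".join(str(value) for value in row) for row in rows]
    return "\n".join(lines)


def build_followup_prompt(qa_pairs: list[tuple[str, list[str], list[list]]]) -> str:
    """Append each (question, columns, rows) answer from the CBI to the CFO instructions."""
    blocks = [FOLLOWUP_INSTRUCTIONS]
    for question, columns, rows in qa_pairs:
        blocks.append(f"Q: {question}\n{format_result(columns, rows)}")
    return "\n\n".join(blocks)


# Masked values copied from the data points above; the question pairing is illustrative.
qa_pairs = [
    ("What is the total travel spend for Navan in 2022?",
     ["TOTAL_TRAVEL_SPEND"], [["98XXX93.5"]]),
    ("How many bookings were made by Navan in 2022?",
     ["COMPANY_NAME", "NO_OF_BOOKINGS", "NET_BOOKING_VALUE"],
     [["Navan", "1xxx56", "9xxx3.5"]]),
]
print(build_followup_prompt(qa_pairs))
```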

Then you let it run and dive deeper into the data as it sees fit, based on the answers from the previous prompt.

By using recursive prompts, the AI can "brainstorm" with itself (the CBI) and ask questions about the data. For example, the AI can ask about the total travel spend, the number of employees who travel, and the average cost of travel per employee. Those questions are sent to the CBI, which will generate answers based on the data. The AI can then examine the answers and ask follow-up questions or drill down into specific areas of interest.

The process of recursive prompts continues until the AI can provide a detailed solution to the question — in this case, how to save 10% on a travel budget. The AI might suggest ways to reduce travel costs, such as negotiating discounts with travel suppliers or promoting virtual meetings over in-person gatherings. Since all of the suggestions are based on the company’s data, they’re tailored to and actionable for that company.
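The loop described above can be sketched as follows. This is a minimal illustration, not Navan’s implementation: `ask_llm` and `ask_cbi` are hypothetical callables standing in for the chat model and the CBI pipeline, the convention that the model signals it is done by starting its reply with “FINAL:” is an assumption made for this sketch, and the scripted replies exist only so the example runs offline.

```python
from typing import Callable


def recursive_prompting(goal: str, ask_llm: Callable[[str], str],
                        ask_cbi: Callable[[str], str], max_iterations: int = 5) -> str:
    """Let the model ask the CBI questions, feeding each round's answers into the next prompt."""
    data_points: list[str] = []
    prompt = goal
    for _ in range(max_iterations):
        reply = ask_llm(prompt)
        if reply.startswith("FINAL:"):
            return reply.removeprefix("FINAL:").strip()
        # Treat every non-empty line of the reply as a question for the data analyst.
        for question in (line.strip() for line in reply.splitlines()):
            if question:
                data_points.append(f"Q: {question}\nA: {ask_cbi(question)}")
        # The output of this round becomes part of the input for the next prompt.
        prompt = (goal + "\n\nData points:\n" + "\n\n".join(data_points)
                  + "\n\nAsk follow-up questions, or reply with FINAL: and your suggestions.")
    # Iteration budget used up: ask for the final answer directly.
    reply = ask_llm(prompt + "\n\nReply now with FINAL: and your suggestions.")
    return reply.removeprefix("FINAL:").strip()


class ScriptedLLM:
    """Hypothetical stand-in for a chat-model call: replays canned replies, records prompts."""

    def __init__(self, replies: list[str]):
        self.replies = list(replies)
        self.prompts: list[str] = []

    def __call__(self, prompt: str) -> str:
        self.prompts.append(prompt)
        return self.replies.pop(0)


def fake_cbi(question: str) -> str:
    """Hypothetical stand-in for the CBI (text-to-SQL plus query execution)."""
    return f"<result for: {question}>"


llm = ScriptedLLM([
    "What is the total travel spend for Navan in 2022?\n"
    "How many bookings were made by Navan in 2022?",
    "FINAL: Negotiate corporate discounts with frequently used airlines and hotel chains.",
])
goal = ("You are the CFO of Navan. You need to assess Navan's travel spend for 2022. "
        "You have a data analyst that can help you with getting the data that you need. "
        "Ask your data analyst questions about your travel spend in 2022.")
answer = recursive_prompting(goal, llm, fake_cbi)
print(answer)
```

Capping the number of rounds (five here, matching the iteration count mentioned below) keeps the cost of the brainstorm bounded even if the model keeps asking questions.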

Here’s what came back when we asked our system to assume the role of CFO and examine a company’s travel spend data for 2022. Our prompt: “How can [company] save 10% of the travel budget?” After just five iterations, the system returned this solution:

------------

Here are four suggestions to save approximately 10% on the travel spend based on the data provided:

1. Increase booking lead time for non-road warrior employees:

- The average lead time for non-road warrior employees is 20.72 days, while it is 16.68 days for road warriors.

- 28.69% of bookings are made within 7 days of the travel date.

- Encouraging non-road warriors to book earlier can lead to better deals on flights, hotels, and car rentals.

- Savings potential: Assuming a 10% reduction in last-minute bookings and a 5% savings on each early booking, the potential savings would be: 0.1 * 4409 * 577.84243933 * 0.05 = $126,957.88

2. Reduce out-of-policy bookings:

- 21.09% of bookings are out of policy, with a total spend of $2,132,216.36 and an average spend per booking of $657.89.

- Implement stricter approval processes and raise awareness about travel policies to reduce the number of out-of-policy bookings.

- Savings potential: Assuming a 50% reduction in out-of-policy bookings and a 5% savings on each in-policy booking, the potential savings would be: 0.5 * 3241 * 657.88841716 * 0.05 = $53,260.37

3. Encourage the use of lower-cost hotel chains for non-executive and non-director employees:

- The average hotel spend per night for [company] employees is $256.30.

- Promote the use of more budget-friendly hotel chains for non-executive and non-director employees.

- Savings potential: Assuming a 10% reduction in hotel spend for non-executive and non-director employees, the potential savings would be: 0.1 * 6377586.12 * (3490586.62 / 8071477.24) = $267,274.27

4. Negotiate corporate discounts with frequently used airlines and hotel chains:

- Top 5 airlines: United, American Airlines, Southwest Airlines, Delta Air Lines, and Alaska Airlines.

- Top 5 hotel chains: Hyatt Hotels & Resorts, Aloft, Marriott, Hilton Hotels & Resorts, and unbranded hotels.

- Negotiate corporate discounts with these preferred providers based on the volume of bookings made by the employees.

- Savings potential: Assuming a 5% discount on flights and hotels with these preferred providers, the potential savings would be: 0.05 * (4702144.26 + 3792270.73) = $424,720.75

In summary, these four suggestions have the potential to save [company] approximately $862,213.27 on travel spend, which is around 9.71% of the total travel spend of $8,880,860.45.

-------------
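Because every figure in that output is generated by the model, it’s worth recomputing the arithmetic deterministically before acting on it. The sketch below re-evaluates each savings formula exactly as the output writes it; the labels are shorthand chosen for this sketch, and the total travel spend is the $8,880,860.45 the output cites.

```python
# Savings formulas copied verbatim from the model's output, evaluated in code.
TOTAL_TRAVEL_SPEND = 8_880_860.45

savings = {
    "1. Earlier booking": 0.1 * 4409 * 577.84243933 * 0.05,
    "2. Fewer out-of-policy bookings": 0.5 * 3241 * 657.88841716 * 0.05,
    "3. Lower-cost hotel chains": 0.1 * 6377586.12 * (3490586.62 / 8071477.24),
    "4. Corporate discounts": 0.05 * (4702144.26 + 3792270.73),
}

for label, amount in savings.items():
    print(f"{label}: ${amount:,.2f}")

total = sum(savings.values())
print(f"Total: ${total:,.2f} ({total / TOTAL_TRAVEL_SPEND:.2%} of total travel spend)")
```

Evaluated this way, items 1 to 3 come to about $12,738.54, $53,305.41, and $275,804.74, which differ from the amounts stated in the output, and the four items add up to about $766,569.43, or roughly 8.63% of total travel spend. LLMs can slip on multi-step arithmetic, so handing calculations like these to code (or back to the database) is a cheap safeguard.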

Recursive prompts unlock much more of an LLM’s potential by allowing the AI to brainstorm with itself. The output of each prompt serves as the input of the next, creating a human-like thinking process. Recursive prompts can be particularly useful in complex scenarios, such as financial forecasting, supply chain optimization, or CO2 emission optimization, where the user needs to consider multiple factors and variables. The result, not surprisingly, is more valuable insights and recommendations.

And because of their powerful functionality, recursive prompts will be a springboard to the next level of AI-driven innovation.

Looking Ahead

It’s an important step, and one that has opened the door to the future. At Navan, we’ll keep giving the AI additional skills that it can use to achieve more insightful, and more surprising, results.

In fact, we already have lots of ideas on how to do just that. In my next article, I’ll delve into the direction I believe this technology is headed.

This content is for informational purposes only. It doesn't necessarily reflect the views of Navan and should not be construed as legal, tax, benefits, financial, accounting, or other advice. If you need specific advice for your business, please consult with an expert, as rules and regulations change regularly.
