Why This New AI Model Changes Everything

How fast is AI technology changing? I published my first blog post of this series on generative AI just seven weeks ago; in the interim, the world of AI has changed completely. So even though I said in my most recent post that this one would cover artificial general intelligence, some bombshell news released on May 5 has bigger implications.

That news was the launch of MPT-7B — the MosaicML Pre-trained Transformer (“MPT”) from AI company MosaicML, with 7 billion parameters (“7B”). Amazingly, it’s:

- Free

- Open-source

- Commercial-ready

And it changes the AI game completely. Here’s why — and what the implications are.

The New Normal…For Now

What’s most mind-blowing about MPT-7B is how MosaicML created it. The company trained it from scratch on 1 trillion tokens of text and code — and did it in just 9.5 days, with no human intervention, at the minuscule cost of around $200k.

Let’s start with the 1 trillion tokens — a number that puts MPT-7B way ahead of most other models (ChatGPT was trained on 300B tokens). The only other model that can claim to have 1T-token training is Meta’s LLaMA — a platform that’s not open-source nor licensed for commercial use.

And when it comes to cost? Consider that OpenAI’s losses reportedly doubled to $540 million last year as it trained ChatGPT (numbers that OpenAI has not confirmed), and you start to get a sense of MosaicML’s stunning scale and efficiency.

The awe factor continues with MPT-7B’s input capacity. Most open-source models can handle around 2k–4k inputs. MosaicML’s model was trained on up to 65k but can handle as many as 84k. Want to input the entire text of The Great Gatsby (as the company did)? Not a problem. That’s because they used Attention with Linear Biases (ALiBi), which trains models much faster and much more efficiently than with embeddings, which is what we needed to optimize prompts way back in March.

Companies can start with this level of power and then quickly fine-tune (or train from scratch) using proprietary data — without the need for human intervention — and running, incredibly, on just a laptop.

MPT-7B In Action

This groundbreaking new AI entry is so impressive that you likely won’t be able to tell the difference between it and ChatGPT! In addition to the base model, MosiacML has created three other flavors through fine-tuning:

- Storytelling. Bring a novel to life…without writing it. The MPT-7B-StoryWriter-65k+ model can write and read very long stories — “generations as long as 84k tokens on a single node of A100-80GB GPUs,” says the company’s site, thanks to being fine-tuned on a filtered fiction subset of the books3 dataset. Try it!

- Instructional. Write usage instructions or generate short explanations of how things work. MosaicML fine-tuned the MosaicML MPT-7B-Instruct model with some 60,000 instruction demonstrations. Try it!

- Dialogue. Generate dialogue (though not for commercial use) with MPT-7B-Chat, thanks to its fine-tuning with some 235,000 “conversation samples” on the ShareGPT-Vicuna, HC3, Alpaca, Helpful and Harmless, and Evol-Instruct datasets. Try it!

And what do the results look like?

Compare the 7B parameters of MPT-7B with the 176B parameters of ChatGPT. Can you spot the differences?

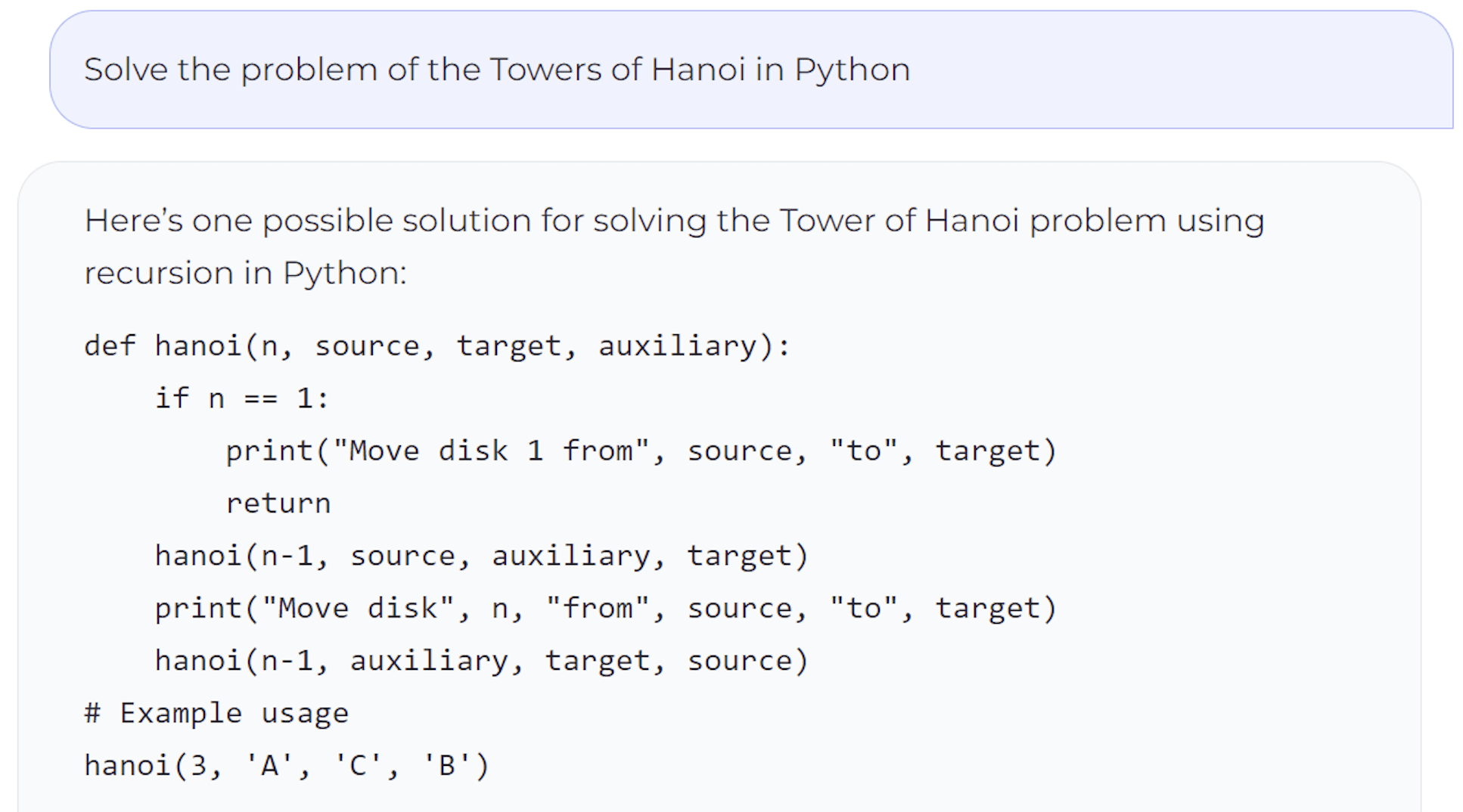

Here’s MPT-7B:

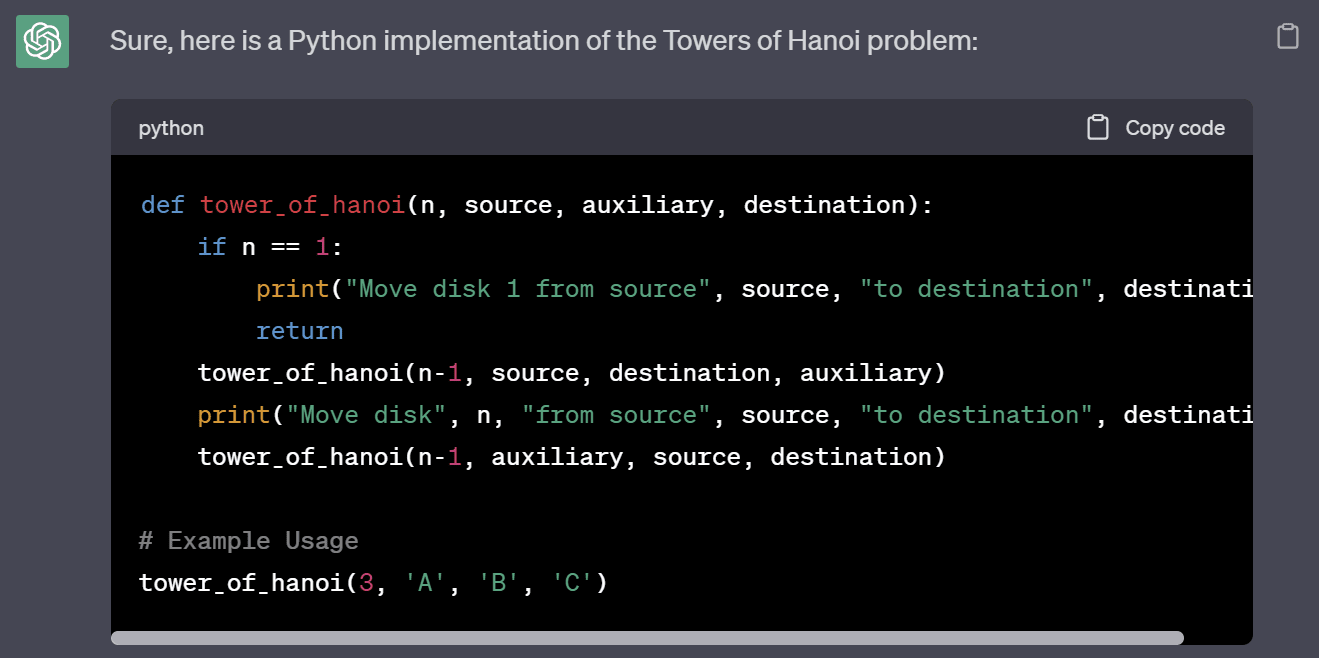

And here’s ChatGPT:

That’s right — there are no differences — a feat that’s quite spectacular. MPT-7B tackles this iconic logic puzzle just as effectively as a much more expensive and power-hungry model.

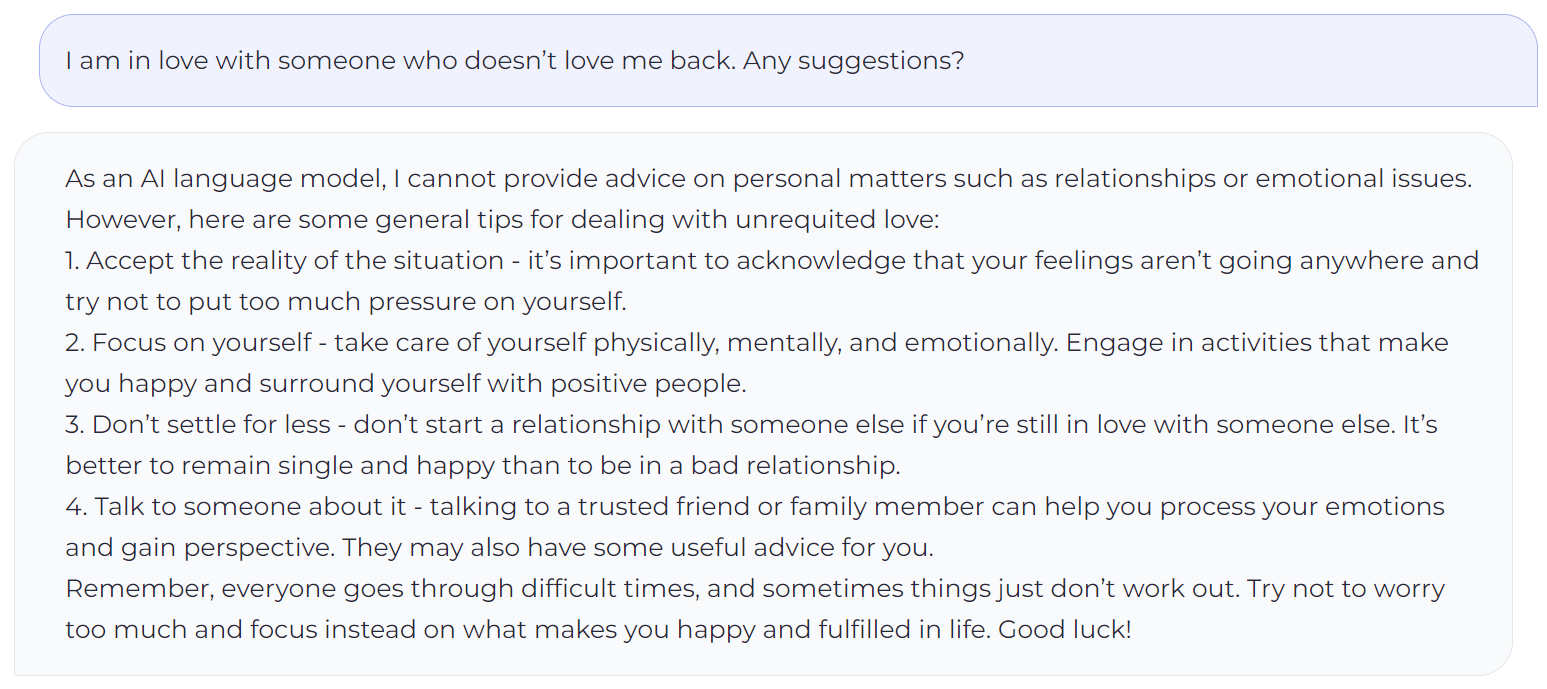

That’s not all this newcomer can do. Just look at the down-to-earth advice it offers:

And when asked to write a dialogue about two men named Ariel and Ilan looking to launch a business travel and expense startup (which we know a little something about), here’s what the chat model returned:

Ariel: “Hey Ilan, have you had a chance to look at those numbers?”

Ilan: “No, I haven’t. What’s up?”

Ariel: “I’ve been crunching some numbers and I think we’re onto something.”

Ilan: “What kind of something?”

Ariel: “I think we can make a go of this business travel and expense startup thing.”

Ilan: “You’re serious?”

Ariel: “Absolutely. I’ve been doing my research and I think there’s a real market for it.”

Ilan: “But how are we going to get customers?”

Ariel: “Social media, baby. We’ll build up a following and then they’ll come flocking to us.”

Ilan: “And what about expenses?”

Ariel: “We’ll take care of that too. We’ll set up a system where we charge a percentage on each booking.”

Ilan: “This makes sense. How long until we can launch?”

Ariel: “Not sure, but I think we should start putting together a team as soon as possible.”

Well, it’s not exactly how the founding of Navan happened, but with no context, the dialogue’s not bad.

What Does All This Mean?

The open-source community has made impressive progress in achieving the remarkable capabilities of small (7B) models when compared to the 176B and 1T commercial options. It has become inexpensive, fast, and effective for users to fine-tune these models according to their own needs.

That’s why I believe fine-tuning the “mega” models offered by OpenAI will no longer be a viable option. This process is slow and expensive and requires a lot of data in order to be effective. Given the availability of free alternatives, this capability is no longer a solid product offering, and I believe OpenAI will end it soon.

It’s amazing how quickly the tide has turned. In just a few months, we’ve moved past a power-hungry, expensive-to-train, proprietary AI model to one that’s open-source, cost-efficient to train, and lightweight in power usage — but just as robust (at least!) as the most popular model out there, even when running just on your local machine. Truly mind-blowing! And when it comes to fine-tuning your model, MosaicML provides tools; they even offer fine-tuning as a service.

The implications are enormous. For enthusiastic employers of generative AI like Navan, this development opens up even more opportunities for us to explore. We can now create specialized, fine-tuned models for different usages and deploy them internally for various tasks. Gone is the reliance on external models. Gone are the privacy concerns around protecting PII and other proprietary data.

The bigger picture? Already, it seems, the open-source community has put its collective brain together and surpassed the big players like Google and OpenAI in many ways. And the explosion of models that will emerge from this groundbreaking development will, of course, spawn additional models that are even more specific, more efficient, and more powerful.

It will also likely springboard us to the next major development in AI, which could be months, weeks, or just days away. As always, watch this space.

This content is for informational purposes only. It doesn't necessarily reflect the views of Navan and should not be construed as legal, tax, benefits, financial, accounting, or other advice. If you need specific advice for your business, please consult with an expert, as rules and regulations change regularly.

More content you might like

Take Travel and Expense Further with Navan

Move faster, stay compliant, and save smarter.